Information Theory

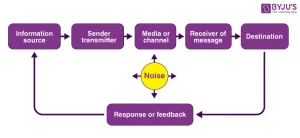

Information Theory is the mathematical study of how information is quantified, stored, and communicated. Established by Claude Shannon in the 1940s, most notably in his 1948 paper "A Mathematical Theory of Communication", it forms the basis of modern communication systems. The theory treats information as a measurable quantity, much as physics treats mass or energy. In its central model, a sender encodes a message and transmits it through a channel, and a receiver decodes it to recover the original information despite channel noise. Key concepts include entropy, which measures the average uncertainty of an information source, and channel capacity, the maximum rate at which information can be transmitted over a noisy channel with an arbitrarily small probability of error. Applications span data compression, error correction, cryptography, and the study of biological systems. By providing a unified approach to communication systems, Information Theory has been pivotal in the development of digital technologies, including the Internet and mobile phones.
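To make the two key concepts concrete, the following is a minimal Python sketch rather than a definitive implementation. It computes the Shannon entropy of a discrete distribution in bits, and the capacity of a binary symmetric channel using the standard formula C = 1 - H(p). The function names `entropy` and `bsc_capacity` are illustrative, and the binary symmetric channel is an assumed example of a noisy channel that the text above does not specifically name.

```python
import math


def entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution.

    Outcomes with zero probability contribute nothing to the sum.
    """
    return sum(-p * math.log2(p) for p in probs if p > 0)


def bsc_capacity(flip_prob):
    """Capacity, in bits per channel use, of a binary symmetric channel.

    flip_prob is the probability that the channel flips a transmitted bit
    (an assumed noise model chosen for illustration).
    """
    return 1.0 - entropy([flip_prob, 1.0 - flip_prob])


if __name__ == "__main__":
    print(entropy([0.5, 0.5]))    # fair coin: maximal uncertainty for two outcomes
    print(entropy([0.9, 0.1]))    # biased coin: less uncertainty
    print(bsc_capacity(0.0))      # noiseless channel
    print(bsc_capacity(0.5))      # output is independent of input
```

A fair coin yields exactly 1 bit of entropy, while a biased coin yields less because its outcome is more predictable. Under the assumed channel model, capacity falls from 1 bit per use with no noise to 0 when bits are flipped half the time, at which point the output carries no information about the input.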